The growing wave of disinformation propagated through online platforms and social networks has pushed us all to be more discerning when it comes to content. But do any of us employ the same skepticism when answering a phone call, receiving an email, or joining a video chat? Deepfakes are a growing fraud phenomenon that means we may soon have to do exactly that. We ask what deepfakes really are, why they are a concern for businesses everywhere, and what can be done to protect against this new wave of cybercrime.

What are deepfakes?

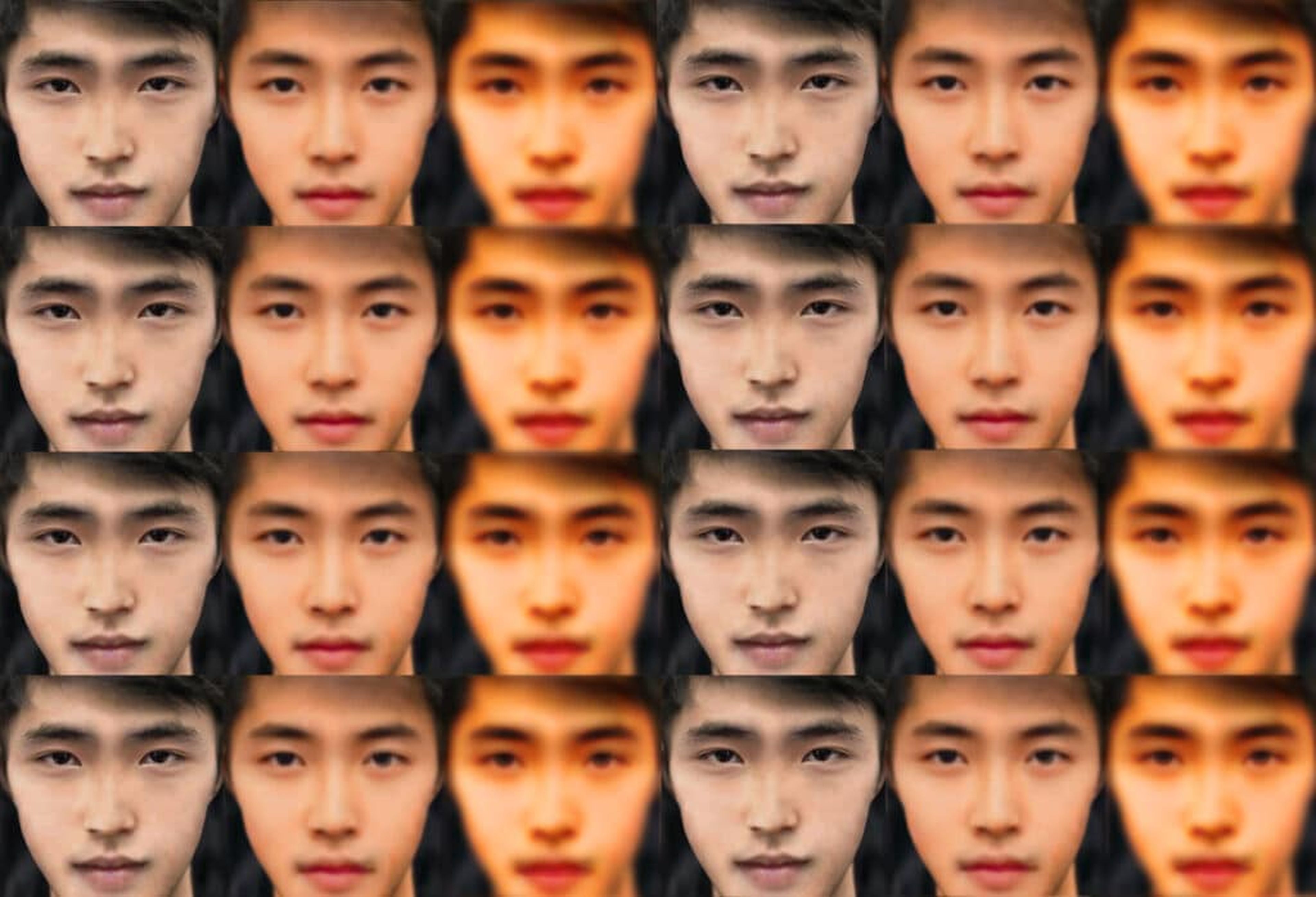

The word ‘deepfake’ is a portmanteau of ‘deep learning’ and ‘fake’, and the technique is a subset of artificial intelligence (AI) technology. Deep learning is the method behind the most advanced deepfakes: algorithms that can learn and make independent, intelligent decisions, adapting and becoming more powerful with each piece of information they encounter.

There are two types of deepfake:

The first, sometimes known as ‘cheapfakes’, are relatively harmless, often humorous, and spread virally on the internet the same way a meme might. These days, anyone with access to a smartphone could make a cheapfake in a matter of minutes. Celebrity impressions, TikTok videos, and pranks often use cheapfake technology to manipulate video footage for entertainment purposes, and they are openly false and rarely mistaken for the real thing. Open source material and app software are readily, and cheaply, available for any amateur interested in creating such content.

The second type of deepfake is more nefarious. Using advanced machine learning technology far superior to that of cheapfakes, true deepfakes are created with the intention of spreading doubt or convincing their audience that they are true. They have been used to present political or business leaders saying or doing something they haven’t and can be employed by scammers to defraud or infiltrate organizations under a veil of legitimacy.

Assessing the deepfake threat

Although recent media attention has painted deepfakes in a negative light, deepfakes can also be used for legitimate purposes to benefit businesses in numerous ways.

This summer, advertising giant WPP released corporate training videos featuring AI hosts. The computer-generated avatars address audiences in their native language and can be tailored to represent different ethnicities, genders, and cultures without ever hiring a single actor or production company. Given the restrictions on movement seen so far this year, deepfakes can be a useful tool when physically bringing people together poses challenges.

Artificial intelligence company Rosebud AI has built its business model around believable deepfakes. Last year, the firm released stock imagery of 25,000 computer-generated people, and it offers extensive editing capabilities to its clients.

All image and video creation will be done via generative methods in five years

Examples of deepfake technology being used for legitimate purposes also include Hollywood stunt doubles and special effects, such as those seen in the Star Wars movie franchise, as well as a recent malaria charity campaign by footballer David Beckham in which he delivered the appeal in nine different languages. AI personas are even becoming influential in their own right, with talent agency CAA signing Lil Miquela, a computer-generated Instagram influencer with over 2 million followers who featured in a Calvin Klein campaign in 2019.

However, as with every technological advancement, there will always be bad actors who seek to use its capabilities for personal gain or manipulation. Journalists and activists have suffered deepfake harassment, and the spreading of political disinformation is a key concern as deepfakes become more advanced; even before deepfakes emerged, the most widely shared fake news story of 2016 falsely claimed that the Pope had endorsed Donald Trump for President.

It’s estimated that fake news already costs around $78 billion annually, but for individuals and host platforms, the true cost of deepfakes is counted in lost credibility. Media outlets and social media platforms have suffered a backlash due to the proliferation of deepfakes on their sites, with Facebook only able to catch 65% of deepfakes despite using the latest detection technology.

How deepfakes hurt businesses

As the current primary targets of deepfakes are celebrities and politicians, businesses may be inclined to think of deepfakes as a far-off threat unlikely to impact on their operations.

However, over three quarters (77%) of cyber security decision makers fear deepfakes will be used to commit fraud.

These fears may well be justified; last year, Israeli police arrested three men accused of conning a businessman out of €8M by using deepfake technology to impersonate a French foreign minister and building a replica of his office. The CEO of a UK energy firm also recently fell victim to a deepfake attack where he authorized the transfer of €200,000 after hearing a computer-generated version of his boss’ voice instructing him to make the payment.

AI’s ability to learn rapidly means that, if given enough source material to extrapolate from, a person’s speech cadences and intonation, their gait when walking, their manner of expression, and even their individual quirks and facial muscle movements can all be imitated with frightening accuracy. A BuzzFeed experiment found that even people’s own mothers can’t tell the difference between their voice and a deepfake version. With such credible forgeries, it’s easy to see how deepfakes could be used to extort, blackmail, demand payments, or carry out telephone phishing attacks with spoofed caller ID.

Deepfake videos in particular pose challenges for businesses; video is often still accepted as better ‘proof’ of an event than still images are, and so a video with a C-suite executive announcing fake company news or sharing views in opposition to company policy could be used to attempt stock market manipulation. Even if there is some doubt over a video’s authenticity, this doubt can be enough to cause volatility and sow seeds of mistrust among investors, customers, or the public.

With such believable fakes and technological difficulties in identifying them, what can businesses do to safeguard against deepfake fraud?


Deepfake defenses

Human defenses: Awareness and education are the first line of defense against deepfake fraud. Training team members to understand the threat, tell-tale signs such as unusual messaging, urgency or unexpected requests, and the potential ramifications of a deepfake attack should be part of companies’ cyber security training programs.

Instilling a healthy dose of skepticism and ensuring that verification takes place before any financial or data transactions are actioned can help to mitigate the risk of successful deepfake fraud. However, since deepfakes can be just as credible on video, any two-factor verification process should account for the fact that even a video call may not be proof enough of authenticity for businesses.
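To make the idea of verification before a transaction more concrete, the following is a minimal sketch of how such a rule might be expressed in code. Everything in it is an assumption for illustration: the channel names, the `PaymentRequest` structure, the `may_execute` function, and the €10,000 threshold are hypothetical and would need to be replaced by a company’s own policy.

```python
from dataclasses import dataclass, field

# Hypothetical confirmation channels considered independent of the original request.
# A video call is deliberately absent: it can be faked and is not treated as proof.
INDEPENDENT_CHANNELS = {"callback_known_number", "in_person", "signed_ticket"}


@dataclass
class PaymentRequest:
    requester: str
    amount_eur: float
    received_via: str  # channel the request arrived on, e.g. "phone" or "video_call"
    confirmations: set = field(default_factory=set)  # channels used to confirm it


def may_execute(request: PaymentRequest, threshold_eur: float = 10_000.0) -> bool:
    """Return True only if the request has enough independent confirmations."""
    # Only recognised channels count, and never the channel the request came in on.
    independent = (request.confirmations & INDEPENDENT_CHANNELS) - {request.received_via}
    # Assumed policy: one confirmation for small amounts, two at or above the threshold.
    required = 1 if request.amount_eur < threshold_eur else 2
    return len(independent) >= required


# Usage: a large transfer requested by phone and "confirmed" only on a video call
request = PaymentRequest("chief executive", 200_000.0, "phone", {"video_call"})
print(may_execute(request))
```

The design choice worth noting is that the channel a request arrives on can never confirm itself, so a convincing voice or video alone is not enough to release funds.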

Technological defenses: Technological solutions often spring up in response to threats, meaning that they are usually one step behind. With each new defense, cyber criminals change tactics and methods, so technological efforts are ongoing in the fight to contain them. Even the Pentagon, through its Defense Advanced Research Projects Agency (DARPA), is now working with some of the USA’s biggest research institutes to come up with a feasible deepfakes solution. Technology giants such as Microsoft are also actively working on solutions to safeguard against deepfakes, although these tools are in their early stages.

Released in September this year, Microsoft’s Video Authenticator tool can analyze a photo or video and produce a percentage chance that the media has been manipulated. Microsoft calls this a ‘confidence score’, and each frame of a video can be assessed for the likelihood of manipulation. Such a tool can be complemented by using invisible watermarks on owned video or images, although in this era of mobile phone footage, watermarks’ effectiveness may be limited at best.
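As an illustration of how per-frame scores of this kind might be turned into a single decision for a whole video, here is a minimal sketch. It does not use or represent Microsoft’s tool or any real API: the `summarize_frame_scores` function, the 0.0 to 1.0 score scale, and the 0.5 flagging threshold are all assumptions, and the scores would have to come from whatever detection tool a company actually uses.

```python
from statistics import mean


def summarize_frame_scores(scores, flag_threshold=0.5):
    """Summarize per-frame manipulation scores, each assumed to lie in 0.0 to 1.0."""
    if not scores:
        raise ValueError("at least one frame score is required")
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("scores must lie between 0.0 and 1.0")
    # Indices of frames whose score meets or exceeds the (hypothetical) threshold
    flagged = [i for i, s in enumerate(scores) if s >= flag_threshold]
    return {
        "mean_score": mean(scores),
        "max_score": max(scores),
        "flagged_frames": flagged,
        "flagged_fraction": len(flagged) / len(scores),
    }


# Usage with made-up scores for a four-frame clip
summary = summarize_frame_scores([0.25, 0.25, 0.75, 0.75])
print(summary)
```

Reporting the maximum and the flagged fraction alongside the mean matters because a video may be manipulated in only a few frames, which an average alone could hide.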

Software firm Adobe has developed attribution data tools to allow users to prove content isn’t fake, but this only works for those interested in creating ‘true’ content; such a tool would only be effective through mass adoption and is unlikely to see adoption by amateur content creators.

Policy defenses: As well as having robust training programs in place, companies can reduce the likelihood of a successful deepfake attack by ensuring that their operations are as transparent and upfront as possible.

Breaking down information silos and facilitating better communications between teams, departments, and geographies means that team members can more accurately gauge when suspicious requests or unusual statements are made: ensuring that as many people as possible within an organization are aware of the correct information makes it far easier to identify, react to, and contain any malicious media.

Having a communication strategy in place to refute deepfakes will also benefit companies in the event that they have to deny or disprove any media that enters the public domain. Being able to rapidly issue a statement or press release can help to slow or avoid any adverse effects on the company, its stocks, or prominent individuals within an organization.

It is important to remember that deepfake technology is still in its relative infancy. The technology first appeared around 2017, and the coming years are likely to see a significant evolution in the skill, effectiveness, and tenacity of deepfake creators as well as in the technology itself. A second generation of deepfakes will no doubt require an even more robust defense strategy; the best companies can do for now is to maintain awareness of technological developments and cyber crime methods, and to have the right people in place to take action when needed.

Mantu’s practice in Innovation means we are at the cutting edge of the latest technological developments; our team creates disruptive solutions to answer the challenges of today and tomorrow.

For more information, visit mantu.com.